The Making of Bones of Yew: Part 01

Why Sourced Input Data Defines the Best in AI Filmmaking

As AI filmmaking continues to explode creatively and technically, one thing remains clear: what separates good work from exceptional work is the data you feed the model. And when we say “you”, we mean YOU, the filmmaker!

At XOlink, we believe the future of AI-assisted storytelling hinges on attention to input. The most advanced models in the world can only work with what they have, so what can you build on top?

If you give them noise, they’ll give you noise back. But give them something rich, textured, and real, and suddenly you’re making cinema.

That’s the philosophy behind Bones of Yew. Yes, it’s an AI film, but it’s also a deeply physical one.

Getting Into the Field

We went out into the ancient yew forests of England and documented them ourselves. These trees, twisted, towering, some thousands of years old, formed the symbolic and aesthetic heart of the project.


From this, we built a high-resolution dataset of their natural forms: chaotic, beautiful, weathered by time. Using this dataset, we trained custom LoRA (Low-Rank Adaptation) models, essentially creating a new pigment to paint with, one rooted in real bark, branch, and canopy.
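For readers curious about what a LoRA is under the hood, here is a minimal sketch in plain Python. It is not our training pipeline: the matrix sizes, the `alpha` value, and the helper names (`matmul`, `lora_update`, `count_params`) are illustrative assumptions. It only shows the core idea, which is that the base model's weights stay frozen while two small matrices learn a low-rank adjustment on top of them.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_update(w, a, b, alpha, rank):
    """Return W + (alpha / rank) * (B @ A) without modifying W."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def count_params(matrix):
    """Count the number of entries in a matrix."""
    return sum(len(row) for row in matrix)


random.seed(0)
d_out, d_in, rank, alpha = 64, 64, 4, 8.0  # hypothetical sizes for illustration

# Frozen base weights: these are never changed during LoRA training.
w = [[random.gauss(0, 0.02) for _ in range(d_in)] for _ in range(d_out)]

# Trainable low-rank factors. A starts small and random, B starts at zero,
# so the adapted weights are identical to the base weights before training.
a = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(rank)]
b = [[0.0] * rank for _ in range(d_out)]

w_adapted = lora_update(w, a, b, alpha, rank)

full_params = count_params(w)
lora_params = count_params(a) + count_params(b)
print(f"full matrix: {full_params} values, LoRA factors: {lora_params} values")
```

With these made-up sizes, the two small factors hold 512 values against 4,096 for the full matrix. That gap is why a focused dataset, like a set of yew photographs, can be enough to steer a large model.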

The result? Subtle, but powerful.

Our majestic tree shots were all informed by what we sourced.

At the film’s end, we used this technique to push the creation in new ways: a strange and striking deer appears, its form subtly shaped by the same yew data. Its silhouette and antlers aren’t generic fantasy; they carry the ghostly, twisted geometry of the yew itself. In that moment, AI doesn’t invent. It reflects. And what it reflects is ancient, wild, and deeply grounded in place.

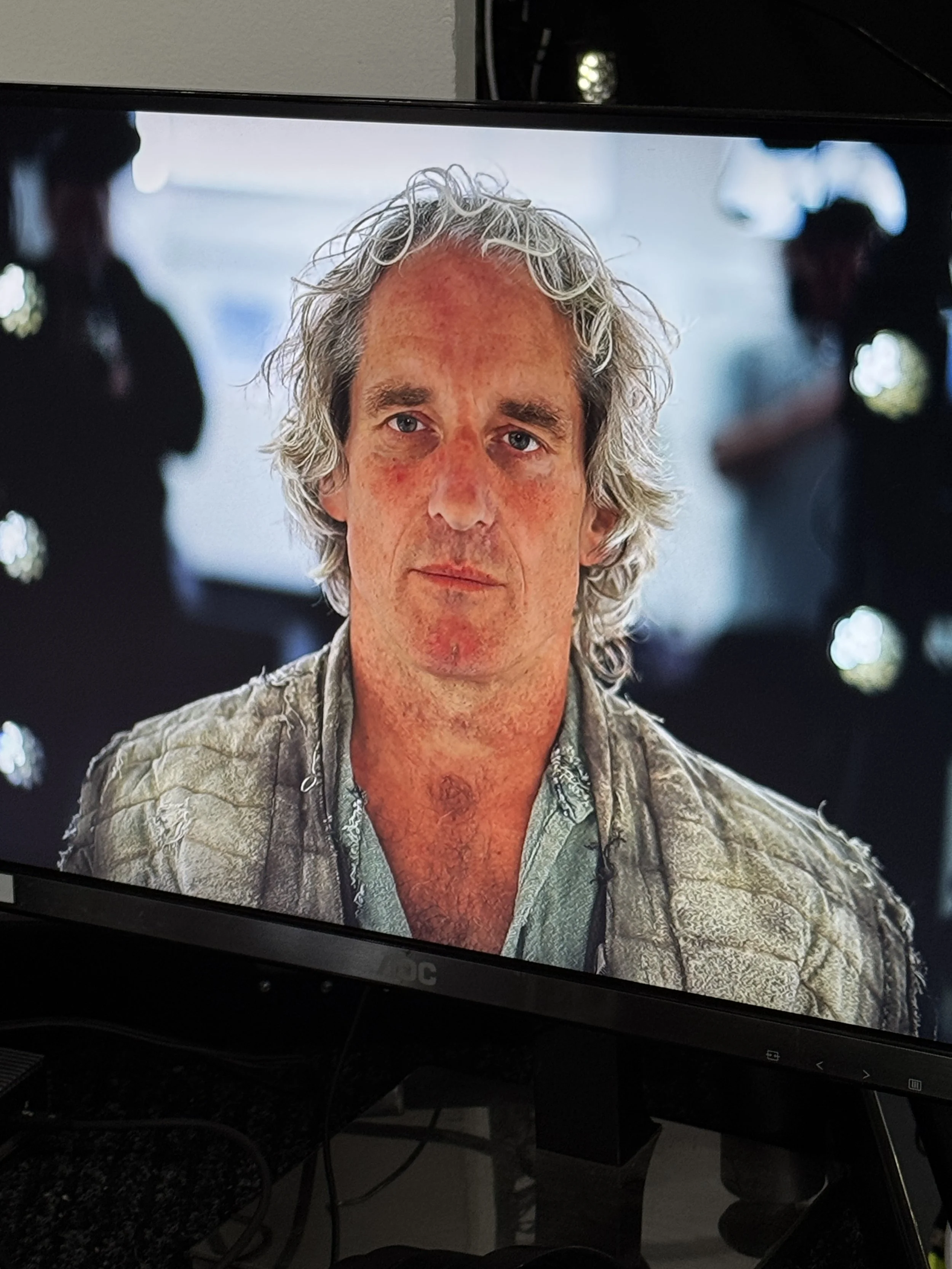

Recreating Matt Lewis: Precision at Scale with Visualskies

To bring our human performance into the same world of authenticity, we partnered with the incredible team at Visualskies, who provided access to their 3D human scanning rig, a cutting-edge photogrammetry system designed for high-speed, high-fidelity capture.

In a single session, we were able to capture Matt Lewis in full, generating volumetric data of his posture, face, and 3 different costumes, all with millimetre-level accuracy. This rig allowed us to scan quickly and at scale, making it possible to work within the demands of production without compromising on quality.

This kind of mass-capture precision meant that even after passing through the layers of AI processing, Matt’s physicality and nuance remained intact, his presence anchoring the film emotionally, even when visually transformed.

What’s more, by training a LoRA on this base data, we were able to age him across the film with realism and continuity, subtly shifting his features, posture, and presence as time passed in the story, while preserving the core of his scanned likeness. The result is an AI-driven transformation that still feels deeply human.

We’ll be exploring Matt Lewis’s performance in greater depth in a later episode of this making-of series, looking at how his physical work and our data pipeline combined to shape the emotional arc of the film.

Motion Matters: Fletched, Drawn, and Trained

NOCK! DRAW! LOOSE!

We partnered with longbow experts from the Longbow Heritage Society to capture archery in motion, not from stock clips or simulations, but from real-world practice. Real archers, real bows, real weather. The way the wood bends under tension, the draw of the string, the stance, breath, and shifting weight of the body: these became the visual and physical grammar we taught to the AI.

This wasn’t just technical R&D. It was a commitment to truth in form.

We are all aware of the challenges AI has with hands… now add archery into the mix!

Current foundation models struggle to depict the precise technique of longbow archery. From the posture of the archer to the nuanced tension in the bow hand, generic AI tools often default to cinematic cliché or guesswork. That’s where our hands-on method came in. The intent was to train on specific, correct motion, drawing from the centuries-old discipline of traditional English longbow use, not vague modern approximations.

At the centre of this work was Carol, from Carol Archery, our incredible weapons master. She not only coached our team and demonstrated historically accurate technique, she also supplied the actual bows used on set and even fletched a bespoke arrow for us, which became a reference object for both visual capture and AI training. She’s spent a lifetime immersed in the world of longbows, and with a wry smile told us:

“Even with all the training in the world, the archers and historians I know will still say it’s wrong.”

Book a lesson with Carol at Carol Archery. She’s awesome!

That arrow appears in the final film, not just as a prop, but as essential data, a piece of handcrafted heritage woven into the generative process.

Courtesy of Carol’s fletching skills.

This process helped create something few AI films attempt: an embodied realism, where the movement of the archer feels not just convincing but lived-in… because it was.

This film was awesome to make. We learned that archery is a lot of fun. It’s physical, ancient, and unexpectedly cinematic when approached with care. But more than anything, this project showed us that we’re only just scratching the surface of what AI filmmaking can become when grounded in real-world craft and culture.

This is just the cusp of our discovery, and we’re drawing the bowstring back for more.